How to get (and modify) file comments

If you use "Get Info" in macOS, you can edit a comment for the item (file or folder). If you copy the file elsewhere, the comment is copied along with it.

Now, programmers like me sometimes want to be able to inspect or even modify the comment. For instance, Find Any File lets you search for comments on any disk (volume), so it has to read these comments.

Unfortunately, macOS does not offer a convenient API for accessing these comments. The safe way is to use AppleScript, especially if you want to alter the comment (see StackOverflow: How to read file comment field and StackOverflow: Upload Comments to File Metadata "Get Info" Mac Command Line).
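A minimal sketch of the AppleScript route, driven from Python through the `osascript` command-line tool. It assumes Finder's `comment` property can be read and set on an alias built from a POSIX path; the helper names are hypothetical, and `run_applescript` only works on macOS. The path and comment are escaped into AppleScript string literals so that quotes or backslashes in file names cannot break the script.

```python
import subprocess


def applescript_literal(text: str) -> str:
    """Quote text as an AppleScript string literal, escaping backslashes and double quotes."""
    return '"' + text.replace("\\", "\\\\").replace('"', '\\"') + '"'


def get_comment_script(path: str) -> str:
    """AppleScript source asking Finder for the comment of the item at `path`."""
    return ('tell application "Finder" to get comment of '
            f"(POSIX file {applescript_literal(path)} as alias)")


def set_comment_script(path: str, comment: str) -> str:
    """AppleScript source asking Finder to change the comment of the item at `path`."""
    return ('tell application "Finder" to set comment of '
            f"(POSIX file {applescript_literal(path)} as alias) "
            f"to {applescript_literal(comment)}")


def run_applescript(source: str) -> str:
    """Run AppleScript source with osascript (macOS only) and return its output."""
    result = subprocess.run(["osascript", "-e", source],
                            capture_output=True, text=True, check=True)
    # osascript ends its output with one newline; drop just that one.
    return result.stdout.removesuffix("\n")
```

On a Mac, `run_applescript(get_comment_script("/path/to/original_file"))` would return the comment as Finder sees it. Each call starts a new `osascript` process and a round trip to Finder, which is exactly why this approach is slow for millions of files.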

However, AppleScript is relatively slow, even if you only want to read the comment. For a program like Find Any File that searches entire disks, querying the comments of millions of files via AppleScript could take hours.

The other method that people have proposed is to read the comment from an extended attribute (EA), com.apple.metadata:kMDItemFinderComment, which is much faster.
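A minimal Python sketch of the EA method, assuming the attribute holds a property list whose root is the comment string (the `plutil` decoding in the Terminal check further down implies a plist). It shells out to the macOS `xattr` tool; treating a non-zero exit status as "no comment EA" is an assumption, and the function names are hypothetical.

```python
import plistlib
import subprocess
from typing import Optional

# Name of the EA that holds the Finder comment.
COMMENT_EA = "com.apple.metadata:kMDItemFinderComment"


def decode_comment_ea(raw: bytes) -> str:
    """Decode the EA's value, a property list whose root is the comment string."""
    value = plistlib.loads(raw)  # detects binary vs. XML plist automatically
    if not isinstance(value, str):
        raise ValueError(f"unexpected plist root type {type(value).__name__}")
    return value


def read_comment_ea(path: str) -> Optional[str]:
    """Read the comment EA via the macOS `xattr` tool; None if the EA is absent."""
    result = subprocess.run(["xattr", "-px", COMMENT_EA, path],
                            capture_output=True, text=True)
    if result.returncode != 0:  # assumed: xattr fails when the EA does not exist
        return None
    # `-px` prints hex byte pairs separated by spaces/newlines; fromhex skips whitespace.
    return decode_comment_ea(bytes.fromhex(result.stdout))
```

A real search tool would read the EA in-process instead of spawning `xattr` per file; the subprocess here just keeps the sketch short and matches the Terminal command used in this post.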

Find Any File (FAF) currently does this, and it seems to work quite well.

Then someone contacted me to report that FAF finds most, but not all, comments. After a bit of investigation, I found that the comment stored in the EA was different from the one that Finder shows in Get Info! That's not good at all for finding comments quickly and reliably.

The customer was kind enough to help me investigate this, and here are my findings:

How macOS stores file comments

macOS may store the comment for a disk item in these places:

- In the EA attached to the file.

- In the hidden .DS_Store file in the same directory as the file in question.

- In the Spotlight database (this is optional).

It appears that Finder's Get Info, as well as Finder's AppleScript `comment` property, reads and writes the comment directly in the .DS_Store file. When the comment is changed this way, Finder also appears to update the EA. The Spotlight data, in contrast, gets updated whenever a file change is detected.
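To look at the Spotlight copy on its own, `mdls` can print a single attribute. This Python sketch assumes that `mdls -raw` prints the bare value (unquoted, no trailing newline) and that `-nullMarker` sets the text printed when the attribute has no value; both are assumptions to verify against `man mdls`, and the function names are hypothetical.

```python
import subprocess
from typing import Optional

# Text mdls prints for an attribute without a value ("(null)" is also its default).
NULL_MARKER = "(null)"


def parse_mdls_raw(output: str, null_marker: str = NULL_MARKER) -> Optional[str]:
    """Turn `mdls -raw` output for a single attribute into a value, or None if absent."""
    return None if output == null_marker else output


def read_comment_spotlight(path: str) -> Optional[str]:
    """Ask Spotlight (macOS only) for the kMDItemFinderComment it has indexed for `path`."""
    result = subprocess.run(
        ["mdls", "-raw", "-nullMarker", NULL_MARKER,
         "-name", "kMDItemFinderComment", path],
        capture_output=True, text=True, check=True)
    return parse_mdls_raw(result.stdout)
```

A comment that literally reads "(null)" would be misread as missing; passing a more unusual `null_marker` avoids that.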

How can the comments get out of sync?

That happens due to an apparent bug in Finder or its services. Here's how to reproduce it:

- Set a comment for a file, using Get Info in Finder, e.g. set it to "original".

- Copy the file to a different location, e.g. into a folder next to it, keeping the original file name.

- Modify the comment of the copied file, e.g. to "changed".

- Copy the copied file back to the original location, choosing to replace the existing file.

- Now check the comment in Finder's Get Info: It'll show "changed", which is the intended result.

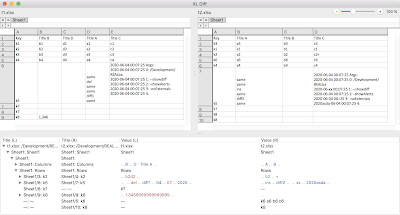

- Now check its EA. In Terminal, use:

`xattr -px com.apple.metadata:kMDItemFinderComment /path/to/original_file | xxd -r -p | plutil -p -`

This may show either an empty string or the old comment "original", but it should show "changed".

- Even worse, Spotlight's comment may also be wrong. Check with:

`mdls /path/to/original_file | grep kMDItemFinderComment`

The Spotlight comment may update automatically later, though, e.g. when you open or modify the file.

(BTW, I suspect that this can also happen when you copy the file in Terminal or by other methods that do not involve Finder, though then the result would be the other way around: the EA might be copied to the replaced file's location, whereas the .DS_Store file won't get updated.)
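The reproduction boils down to three readings of the same comment that should agree but don't. A small comparison like this hypothetical Python helper (not part of FAF) flags which of the fast sources is stale, treating Finder's value as the reference because that is what the user sees in Get Info. The three inputs are assumed to come from Get Info or AppleScript, the `xattr` check and the `mdls` check.

```python
from typing import List, Optional


def stale_sources(finder: str, ea: Optional[str], spotlight: Optional[str]) -> List[str]:
    """Return which fast sources disagree with Finder's comment.

    A missing EA or Spotlight value (None) is compared as an empty comment,
    so an item that has no comment anywhere is not reported.
    """
    stale = []
    for name, value in (("ea", ea), ("spotlight", spotlight)):
        if (value if value is not None else "") != finder:
            stale.append(name)
    return stale
```

For the copy-and-replace case above, `stale_sources("changed", "original", "changed")` reports only the EA, while a fresh replace that Spotlight hasn't re-imported yet would report both.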

Later I found an even easier way to mess up the comment:

After setting the comment with Get Info, rename the file. Check again with Get Info, and you'll see that it still has the comment, but if you now look at the EA, it'll show an empty comment.

So, there appear to be several bugs in Finder's copy and rename operations (I've verified this in both macOS 10.13.6 and 15.4, so they've been around for a while):

Two kinds of bugs

- Replacing or renaming a file should transfer the EAs, but doesn't. Maybe Apple has reasons to keep some EAs of the replaced file, but the comment surely isn't one of them: the comment belongs to the file being copied.

- Copying or renaming a file that replaces another should also trigger an immediate update by the Spotlight importer, so that it records the new comment. Or maybe Finder does trigger the importer, but a race condition makes the importer read the outdated (or not yet existing) comment from the .DS_Store, because Finder updates the .DS_Store too late.

Those are my findings so far. I'll file a bug report with Apple, but I have little hope that it will get addressed, as I've filed related bugs in this area before and nothing happened.

What does this mean for apps that search for file comments?

Right now, the only reliable way to get a file's comment is the slow AppleScript method (or reading the .DS_Store file directly, but that's undocumented and may break at any time, because the file's format is private to Apple). This means I might have to switch Find Any File to the much slower AppleScript method.

But for now, I'm working on a "matching script" for Find Any File that can identify and fix these out-of-sync comments. If one runs this script once on all volumes (which may take a while), the EAs would be up-to-date and FAF could search them quickly.
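The matching script itself isn't published here. As a rough, hypothetical Python sketch of the idea (not FAF's actual script), it could walk a volume, compare each item's Finder comment with its EA, and rewrite the EA from Finder's value where they differ. Writing uses `xattr -wx`, which takes the value as hex; storing the comment as a binary plist is an assumption, and so is the expectation that Finder and Spotlight accept an EA written this way. The two readers are passed in as callables so the comparison logic can be checked without macOS.

```python
import os
import plistlib
import subprocess
from typing import Callable, Iterable, Iterator, Optional, Tuple

COMMENT_EA = "com.apple.metadata:kMDItemFinderComment"


def iter_items(root: str) -> Iterator[str]:
    """Yield every file and folder below `root` (os.walk does not follow symlinks)."""
    for dirpath, dirnames, filenames in os.walk(root):
        for name in dirnames + filenames:
            yield os.path.join(dirpath, name)


def find_mismatches(paths: Iterable[str],
                    finder_comment: Callable[[str], str],
                    ea_comment: Callable[[str], Optional[str]]) -> Iterator[Tuple[str, str]]:
    """Yield (path, finder_value) for every item whose EA differs from Finder's comment."""
    for path in paths:
        expected = finder_comment(path)  # the slow part: one AppleScript query per item
        if (ea_comment(path) or "") != expected:
            yield path, expected


def encode_comment_ea(comment: str) -> str:
    """Encode the comment as a binary plist and hex it for `xattr -wx`."""
    return plistlib.dumps(comment, fmt=plistlib.FMT_BINARY).hex()


def write_comment_ea(path: str, comment: str) -> None:
    """Overwrite the comment EA of `path` (macOS only)."""
    subprocess.run(["xattr", "-wx", COMMENT_EA, encode_comment_ea(comment), path],
                   check=True)
```

On a Mac, the pieces would be wired together roughly as `for path, comment in find_mismatches(iter_items("/Volumes/Data"), finder_fn, ea_fn): write_comment_ea(path, comment)`, where the volume path is an example and `finder_fn` / `ea_fn` are hypothetical wrappers around the AppleScript query and the `xattr -px` read. The slow Finder query still runs once per item, which is why a full pass over a volume takes a while.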